Google Doesn't Want You to Click – And That's a Big Problem for News

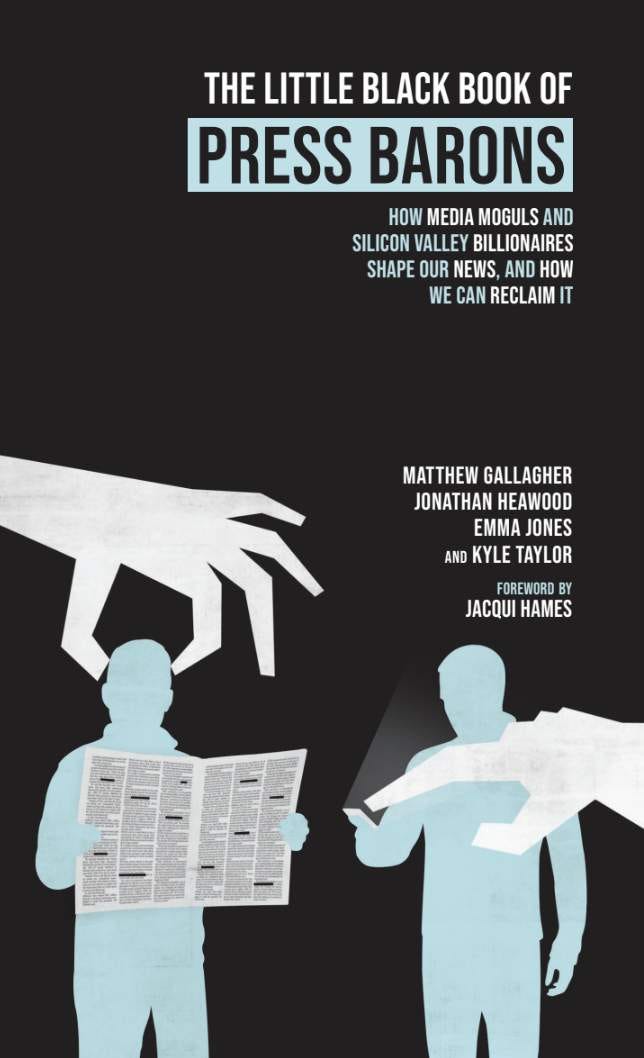

Kyle Taylor, co-author and editor of 'The Little Black Book of Press Barons', on how tech companies' AI models are not just curating the news but defining truth itself.

New research shows that 69% of Google searches now end without a single click. That means almost seven out of every ten times someone turns to the world’s most-used search engine, they don’t actually visit any of the websites listed in the results. Instead, they get a pre-packaged answer directly from Google — increasingly powered by its Gemini AI model.

This isn’t just a shift in user behaviour. It’s a seismic change in how people access information, and it’s already proving catastrophic for news organisations. Publishers are still doing the work: reporting, verifying, editing. But audiences are no longer reaching them. Instead, their facts are being scraped and reassembled into Gemini’s answers: unattributed, unaccountable, and unchecked.

As I write in The Little Black Book of Press Barons, this new system gives tech platforms “the ability to influence and filter the majority of the world’s information with just a few keystrokes.” These companies aren’t merely curating the news. They’re defining truth itself.

And while it may feel like this signals the decline of traditional press power, in one important respect the opposite is true. In an algorithmic ecosystem that feeds off scale and sensationalism, the legacy giants, such as the Daily Mail and Murdoch’s empire, become even more powerful. Their headlines, already engineered for virality, are now the default inputs for AI summaries, giving them extraordinary influence over what gets surfaced and what gets ignored.

It’s a strange paradox I describe in the book: although the overall power of traditional press barons has declined in absolute terms, within the digital world they are relatively more important amid the vast sea of crap that now floats through the waterways of social media. In other words, when everything is chaos, the loudest voices still dominate, and the loudest are still owned by the same handful of press barons who’ve been shaping public discourse for decades.

All of this is made worse by the fact that tech platforms treat all content as equal — as long as it generates engagement. That’s the business model. As I explain, “the entire construction of the platform itself is based solely on keeping you there as long as possible by nearly any means necessary.” If outrage works better than truth, then outrage wins. If lies get more clicks than facts, the algorithm will oblige.

Even when social platforms have tried to course-correct, their efforts have been half-hearted. In the days after the 2020 US presidential election, Meta briefly boosted “news ecosystem quality”, elevating outlets with actual editorial standards. But the effect on engagement was so detrimental that they scrapped the tweak after just a few days.

Now, they’ve gone even further. Earlier this year, Mark Zuckerberg announced Meta would “get rid of fact-checkers” altogether, replacing them with a “Community Notes” system where unpaid users could annotate misleading posts. “The company will catch less bad stuff,” Zuckerberg admitted — as if they were catching much to begin with.

Meanwhile, right-wing disinformation networks have adapted even faster than regulators. Bad actors have learned to optimise their content using the same methods tech platforms reward: emotion, repetition, and confirmation bias. In one post-election analysis, almost half of the top-performing US news posts on Facebook came from known right-wing disinformation sources. Factual reporting from the likes of NPR and CNN? Just 10%.

This has consequences — real, measurable ones. Nearly a year after the 2020 election, 53% of US Republicans still believed the vote was rigged. By 2023, that number had climbed to 68%.

And here’s the kicker: people trust what they see. In surveys, users claim to distrust “the news,” but when asked if they trust the news they personally read, the numbers shoot up. Everyone thinks they’re the savvy one. It’s everyone else who’s being duped.

This isn’t a uniquely American problem. In the UK, we’ve seen similar patterns — from the collapse of local news to the rise of Facebook groups as hubs of toxicity and misinformation. Even some reputable media outlets, deeply flawed though they may be, are being drowned out by a flood of pseudo-news optimised for engagement and fed to users by design.

Meanwhile, Elon Musk’s X — formerly Twitter — is accelerating in the opposite direction, reinstating far-right accounts and personally amplifying conspiracy theories. When riots erupted in the UK in 2024, fuelled by false rumours and anti-immigrant sentiment, Musk tweeted that a “civil war is inevitable.” He wasn’t just commenting. He was inciting.

In moments like this, the inadequacy of existing regulation becomes painfully clear. The UK’s much-hyped Online Safety Act failed to do anything to curb this flood of disinformation — not because the problem is too big, but because the political will to take on tech barons is still so weak. “If regulation meant to protect society can’t even help quell the most extreme cases – like violent race riots – it seems fairly obvious the tech barons have won, even against democratically elected governments,” I argue in the book.

This is the new information order. Not a level playing field of free expression, but a hierarchy where truth is optional, context is dead, and power lies with whoever owns the platform.

As I posit in The Little Black Book of Press Barons, we may have already reached a point where “the tech barons – too strong, their empires too big to rein in – are left with near-absolute power to define truth and shape our world.”

You can get the whole story, and the full scope of what’s at stake, from four experts in the field in The Little Black Book of Press Barons, available now from Byline Books.
